
Multimedia involves the use of multiple forms of communication media in an interactive and integrated manner. In current multimedia systems the interactivity is predominantly derived from the textual information, rather than from other media such as images, video or audio. Multimedia is unlikely to achieve its full potential until all media, including visual information, can actively support the full level of functionality expected. Part of the process of achieving this goal is to improve current techniques for authoring these additional media in more sophisticated ways. This paper looks at current research into the authoring of visual information and ways in which this information can be more tightly integrated into multimedia applications.

The few minor exceptions to the lack of visual information interactivity predominantly revolve around hand-crafted, small-scale applications. These applications are however sufficient to illustrate the significantly enhanced functionality which can be achieved with active visual information. For a good example of this, look at the map images in the Virtual Tourist on http://wings.buffalo.edu/world/ [see http://www.virtualtourist.com/].

These small scale applications have tended to be handcrafted using low level tools (such as MapEdit - a shareware Unix tool) and contain hard coded static syntactic descriptions of the image data. Indeed, we are not truly interacting with the visual information, but rather interacting with an underlying syntactic description of the image (which provides us with the illusion of interaction with the visual information).
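The kind of static description involved can be sketched as follows. This is a minimal illustration in Python, not MapEdit's actual file format: the `Region` class, the `resolve_click` function, the coordinates and the target names are all hypothetical and introduced only for this example. The point it shows is that a mouse click is resolved against stored shapes, never against the image pixels themselves.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Region:
    """A hand-drawn polygon and the link it triggers (hypothetical structure)."""
    outline: List[Point]
    target: str

    def contains(self, p: Point) -> bool:
        """Ray-casting point-in-polygon test."""
        x, y = p
        inside = False
        n = len(self.outline)
        for i in range(n):
            x1, y1 = self.outline[i]
            x2, y2 = self.outline[(i + 1) % n]
            # Does a horizontal ray starting at p cross this edge?
            if (y1 > y) != (y2 > y):
                x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
                if x < x_cross:
                    inside = not inside
        return inside


def resolve_click(regions: List[Region], p: Point) -> Optional[str]:
    """Return the target of the first region containing p, or None."""
    for region in regions:
        if region.contains(p):
            return region.target
    return None


# Hard-coded description of one image. It has to be edited by hand
# whenever the image itself changes.
world_map = [
    Region([(10, 10), (60, 10), (60, 40), (10, 40)], "europe.html"),
    Region([(70, 20), (120, 20), (95, 70)], "africa.html"),
]
```

Calling `resolve_click(world_map, (30, 20))` looks up the click in the stored outlines only; if the image were redrawn without updating `world_map`, the same click would still return the old target.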

We can understand the poor use which is made of visual information better by considering the use of textual data within multimedia, an area that has received (and continues to receive) considerable research attention. The text can be stored, analysed, manipulated, and generated synthetically. Essentially the text can be treated as consisting of lexical components or discrete entities - hypercomponents - (words, sentences, paragraphs, etc.) which obey a series of syntactic and semantic rules describing the inter-relationships (Ginige, 1995). Within the multimedia application these hypercomponents can be used to create nodes, anchors, links, etc. These elements provide the navigation functionality which supplies the interactivity - the core of any multimedia application. A growing number of authoring tools exist which assist in the conversion of textual information into an appropriate structure (Robertson, 1994).

The evolution of visual information is still at a much lower level, and it is predominantly treated as a passive medium. Visual information in its raw form is highly unstructured and yet very commonplace (documentary video tapes, image and photographic collections and databases, etc). Many visual information applications (such as medical imaging, robotics, and interactive multimedia) make use of the visual information in a highly structured format. Conceptually visual information can be treated in the same way as text: as consisting of discrete entities which obey certain syntactic rules. The primary difficulty however lies in identifying these entities and the associated rules, and then interpreting them. When this is achieved visual information can become an active medium as powerful as textual information (and in many cases more so). It is this premise which has driven this work.

Although there has been considerable work on structuring textual information, there has been minimal work performed on structuring the visual information to suit multimedia applications. For example, the development of practical multimedia systems requires the use of both suitable information and the appropriate structuring of this information. This information structuring is critical to the development of high quality visual information applications. One of the major obstacles hindering the advancement and commercial acceptance of these applications is the cost of structuring the vast amount of visual information. At present most existing visual information databases have been handcrafted - using the human operator to perform the lexical decomposition of the visual information; a process which is excessively expensive and time consuming.

This paper looks at current research into the authoring of visual information and ways in which this information can be more tightly integrated into multimedia applications. Current techniques range from completely manual markup of the visual information (a process which is excessively expensive and time consuming for large databases) to the use of object recognition schemes (which is only practical for highly constrained problems). We describe a compromise between these extremes - providing intelligent assistance to the author in the identification of prospective syntactic components of the visual information.

One of the key elements of any multimedia application is interactivity - the ability to interact with the media in such a way that improves the accessibility, useability and presentation of the information. The level of interactivity (along with many related factors, such as the appropriateness of the links) will greatly affect the overall success of the application. The degree to which we achieve interactivity will be strongly related to the way in which we model and analyse each of the media.

We can identify two main factors which influence our ability to achieve appropriate interactivity. The first is the ability to structure the information in such a way that appropriate sections of the information can be specified and utilised - eg. the identification of key words or phrases in textual information. The second factor is our own ability to interpret and utilise this information - eg. how easily we can locate a specific textual phrase from a page of text. We will discuss both of these factors.

Forms of electronic information such as text, numerical data, etc. are highly structured. This structure (or more correctly, the ability to identify this structure) is typically critical to the effective use of this information in supporting interactivity. For example, in multimedia systems text is typically stored, analysed, displayed, manipulated, etc. In order to achieve this, applications (or authoring packages) typically structure the textual information into 'nodes' (where each node is typically information on a specific topic). Each node consists of discrete entities (words, sentences, paragraphs etc). Appropriate entities can be used as specialised components. For example, a word can act as an anchor point for links to other nodes (eg. if the user clicks the mouse on the word, the link is traversed, and the destination node is displayed). The effective use of this textual information relies strongly on the ability to make explicit this inherent structure of the textual information. This is also true for most alternative uses of similar information.
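The node, anchor and link structure described above can be sketched as a small data structure. This is an illustrative sketch only, not the representation used by any particular authoring package: the class names `Node` and `Anchor`, the methods `link_word` and `follow`, and the sample text are assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Anchor:
    """A span of characters in a node's text that starts a link."""
    start: int
    end: int      # exclusive
    target: str   # name of the destination node


@dataclass
class Node:
    """Information on one topic, plus the anchors defined within it."""
    name: str
    text: str
    anchors: List[Anchor] = field(default_factory=list)

    def link_word(self, word: str, target: str) -> None:
        """Make the first occurrence of `word` an anchor to `target`."""
        start = self.text.find(word)
        if start < 0:
            raise ValueError(f"{word!r} not found in node {self.name!r}")
        self.anchors.append(Anchor(start, start + len(word), target))

    def follow(self, offset: int) -> Optional[str]:
        """Return the destination node for a click at character `offset`."""
        for anchor in self.anchors:
            if anchor.start <= offset < anchor.end:
                return anchor.target
        return None


# Two hypothetical nodes; the word "harbour" in the first links to the second.
nodes: Dict[str, Node] = {
    "sydney": Node("sydney", "Sydney is known for its harbour and opera house."),
    "harbour": Node("harbour", "The harbour is crossed by a steel arch bridge."),
}
nodes["sydney"].link_word("harbour", "harbour")
```

Because a word is a discrete entity with a known position, finding the anchor under a click is a simple range test. It is exactly this step that has no ready equivalent for an unstructured image.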

Recent authoring frameworks have begun to make this structure more explicit. For example, our own work on MATILDA (Lowe, 1996) separates the information domain from the application domain, and then within the information domain makes explicit the various levels of information structure. These levels include lexical (ie. identification of information components), syntactic (structural relationships between these components), and semantic (meaning based relationships between the components).

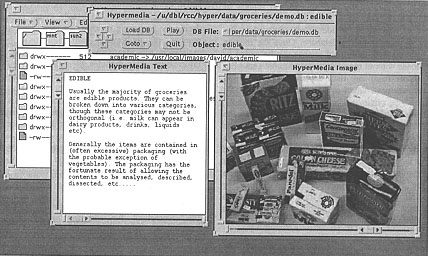

Despite these advances in the concepts of media structuring, the evolution of visual information (such as images and video) is still at a much lower level. In general it is either poorly structured, or the structure is poorly understood. Current techniques are relatively primitive in terms of their ability to make the structure of visual information explicit. As a result, in traditional multimedia systems visual information is predominantly treated as a passive medium - essentially acting as an annotation to the textual information. This is illustrated in Figure 1.

Figure 1: Typical linking of information in a multimedia application: The links are restricted to the textual information, and other forms of information act as annotations to the text.

A number of (increasingly sophisticated) tools exist which assist in the process of structuring the raw textual information into a form suitable for multimedia. This typically requires partitioning the information into appropriate nodes, identifying the hypercomponents, and specifying appropriate links. Recent authoring tools, such as HART (Robertson, 1994), have begun to focus on providing a greater degree of support for the author during this process. Support is typically provided through both procedural guidance (assisting in the process of converting the information) and intelligent assistance (providing context dependent choices for the author - such as identifying and suggesting appropriate keywords from the text). To date, these tools still focus almost entirely on textual information. At present there is minimal support for assisting the authoring process of visual information. By improving this support the development of visual information applications becomes much simpler, and available to a much larger audience.

If we consider the critical aspect of image structuring, then we can identify several approaches. We will briefly discuss a few of these before considering what we see as an acceptable compromise given the current technological limits.

Figure 2: Multimedia application incorporating active visual information: The visual information elements are components which can be used for linking in the same way as the textual components.

Figure 3: A screen dump from MapEdit - a shareware Unix program which allows manual markup of images for use on the WWW. The cursor is used to draw specific shapes around objects within the image, and a resultant text map file is written containing the markup.

This approach has several significant problems. Firstly it means that we are actually interacting with a map file, rather than directly with the image (though this does provide the illusion of interaction with the image). Since we are not interacting with the image, we have the additional problem of maintaining consistency between the image and the map: any changes to the image must be reflected in changes to the map file. More significantly, the effort required to manually mark up images will be excessive in all but the simplest cases. This situation will be significantly exacerbated in the case of video information where a typical 10 minute video might contain 18,000 frames. At present this has meant that only the simplest cases of visual information have been marked up, and typically only in small isolated cases. Nevertheless, this work does indicate the validity and improved functionality which can be obtained from the use of active visual information.
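The frame count quoted for a 10 minute video follows from simple arithmetic if we assume a rate of 30 frames per second; the rate is not stated in the text, but it is the value implied by 18,000 frames in 600 seconds. The short Python sketch below makes the calculation explicit. The markup time of 20 seconds per frame is a purely hypothetical figure, used only to indicate the scale of the manual effort.

```python
def frame_count(minutes: float, fps: float = 30.0) -> int:
    """Number of frames in a video of the given length (fps is an assumption)."""
    return round(minutes * 60 * fps)


def manual_markup_hours(frames: int, seconds_per_frame: float) -> float:
    """Total authoring time if every frame is marked up by hand."""
    return frames * seconds_per_frame / 3600


frames = frame_count(10)                                      # 18000 frames
hours = manual_markup_hours(frames, seconds_per_frame=20.0)   # 100.0 hours
```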

Application description

The application we developed, although very simplistic in comparison to existing multimedia applications, demonstrates one possible technique for enabling the use of active image data rather than passive image data, as well as providing a platform for investigating the general performance and change in functionality. No attempt was made to create a commercial quality application, as the primary aim was to investigate the implications of the imaging principles involved, rather than the various multimedia principles. As a result the overall operation and performance of the system from a multimedia point of view is quite simplistic. Only sufficient functionality to investigate the image algorithms was incorporated. Development and use of this application are detailed elsewhere (Lowe, 1993).

The recognition process which was used (Lowe 1992) formed the central core of the multimedia application. In previous work (Lowe 1990) a representation was developed which decomposed an image into a number of hierarchical information layers. These layers contained image features such as edge information (and other primary image discontinuities), texture, shading, and colour. These layers of information were used to perform an object recognition scheme based on hypotheses generated from feature matches. The application was developed on a Sun SPARCstation running OpenWindows version 3 with an X-Windows interface, and was coded in such a way as to make the user interface as simple and logical as possible. The images are processed offline to extract the information hierarchy. They are then loaded in when required for a particular database element. The display routines decode this data and regenerate the images progressively so that the user has an indication of the image content as early as possible. If the user then selects an object within the image (by clicking on it with the mouse) then the recognition scheme will attempt to recognise the object using the information representation rather than having to regenerate the canonical image. If the recognition is successful the application will jump to an appropriate database element (ie. image, text and audio that is relevant to the selected object).

Figure 4 is a snapshot of the screen containing the multimedia application. The user has just moved the mouse over an object in the image and pressed the left mouse button. The object was identified and the appropriate link will be subsequently traced. This application did not attempt to address any of the broader issues of information handling, database management, etc. The sole purpose was to investigate one method for automating the process of generating active image information in a multimedia application, and to then consider the implications of this in terms of the effective change in the application functionality.

Figure 4: Snapshot of screen containing the HyperImages application: A link which has an object from within an image as an anchor has just been triggered and is about to be traversed.

Results of using object recognition

After the application was developed and a typical database installed, the system was evaluated. This evaluation included a consideration of both authoring issues and useability issues. The useability evaluation encompassed both a subjective user's point of view and quantitative measurements of the performance. The process of authoring the database required the author to manually generate the models used for the object recognition. Once this step was completed the author did not need to consider the visual information again, since the application handled the automatic creation of links. The most difficult part of the authoring process was therefore the generation of the object models. This required the specification of a wireframe model of the object and detailing the surface shading, colour and texture. It was found that for this approach to be of practical use, this process would need to be automated. The model specification required a comparable effort to the manual identification of the objects (ie manual authoring). The primary difference between a manual authoring approach and this automated approach is related to ongoing effort. With the automated approach, the effort required is related to the number of objects to be identified, rather than the number of images in the database. Once the models have been created, additional images can be added to the database without requiring any additional authoring effort (apart from any data capture and conversion which is required).
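The difference in ongoing effort can be illustrated with a simple cost model. This is only a sketch under stated assumptions: the function names, the unit costs and the database sizes are hypothetical, and the two unit costs are set equal purely to reflect the observation that model specification required an effort comparable to manual identification. No measured figures from the evaluation are used.

```python
def manual_effort(num_images: int, objects_per_image: float,
                  cost_per_markup: float) -> float:
    """Manual authoring: every object in every image is marked up by hand."""
    return num_images * objects_per_image * cost_per_markup


def model_effort(num_distinct_objects: int, cost_per_model: float) -> float:
    """Model-based authoring: one model per distinct object.

    Adding further images adds no authoring cost in this simplified model.
    """
    return num_distinct_objects * cost_per_model


# Hypothetical database: 20 distinct objects, about 3 of them in each image,
# and one unit of effort for either a manual markup or an object model.
for num_images in (5, 50, 500):
    print(num_images,
          manual_effort(num_images, 3, 1.0),
          model_effort(20, 1.0))
```

Under these assumptions the manual effort grows in proportion to the number of images while the model-based effort stays constant, so the model-based approach only pays off once the database is large relative to the number of distinct objects.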

Once the application was completed and the demonstration database installed, a number of people were asked to use the system and comment on its useability. The most significant conclusion drawn from this was the effectiveness of the improved functionality of the resultant system. The users generally seemed to be quite impressed with the idea of active image data, and found it much easier to locate and select an object within an image than a word within a document.

Using object recognition for achieving active image data

Although the application which was developed had a high level of success, this was rather artificial. It certainly illustrated the appropriateness of using active visual information. However it should be recognised that the visual information within the application was limited to relatively simple objects. Every object was rectilinear, relatively simple in shape, and had a simple shading, texture, and colour. It was only because the objects were so simple, that the object recognition scheme had such success in correctly identifying objects.

For an object recognition scheme to be effective in implementing active visual information in multimedia it needs to satisfy at least two criteria. Firstly it must be robust, reliable, and consistent for a very wide range of applications and objects. Secondly, it must be very straightforward to expand the object database which it uses to identify objects. The object recognition scheme used for HyperImage satisfies neither of these criteria. Much research is occurring in the field of computer vision, and great success has been achieved in restricted application domains and for restricted image sets. Nevertheless, a general object recognition scheme which could handle the broad range of visual data present in multimedia applications is likely to be a considerable distance off. In the long term, object recognition will become increasingly important in multimedia authoring and multimedia applications (as has been foreshadowed by experiments such as HyperImage). In the shorter term however, object recognition would appear to be insufficiently mature, except in perhaps very isolated cases.

Having accepted that object recognition is likely to be too impractical for use for general multimedia applications, we need to consider possible alternatives. As was discussed above, information structuring can typically include lexical, syntactic, and semantic structuring. The lowest level of this - lexical structuring - involves identifying the lexical elements within the media. This does not require knowledge of the meaning of the information, or even true object recognition. Lexical decomposition is predominantly a segmentation problem - where we wish to identify things such as regions of images and boundaries between scenes in videos. Considering this view, and recognising that we cannot completely automate the authoring process, we can look at the possibility of semi-automating the process.
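As an indication of how little interpretation lexical decomposition needs, the following Python sketch proposes scene boundaries in a video by comparing grey-level histograms of consecutive frames. Histogram differencing is used here as a simple stand-in technique, not as the method adopted in this work; the function names, the number of bins, the threshold and the tiny test frames are all assumptions made for the example.

```python
from typing import List, Sequence

Frame = Sequence[Sequence[int]]   # rows of grey levels in 0..255


def histogram(frame: Frame, bins: int = 8) -> List[float]:
    """Normalised grey-level histogram of one frame."""
    counts = [0] * bins
    total = 0
    for row in frame:
        for pixel in row:
            counts[min(pixel * bins // 256, bins - 1)] += 1
            total += 1
    return [c / total for c in counts]


def scene_boundaries(frames: Sequence[Frame], threshold: float = 0.5) -> List[int]:
    """Indices i at which frame i looks like the start of a new scene.

    The distance is half the L1 difference between consecutive histograms,
    so it lies in [0, 1]. The threshold is an arbitrary illustrative value.
    """
    if not frames:
        return []
    cuts = []
    previous = histogram(frames[0])
    for i in range(1, len(frames)):
        current = histogram(frames[i])
        distance = 0.5 * sum(abs(a - b) for a, b in zip(previous, current))
        if distance > threshold:
            cuts.append(i)
        previous = current
    return cuts


# Three dark frames followed by two bright ones (2 x 2 pixels each).
dark = [[10, 12], [11, 13]]
bright = [[240, 250], [245, 235]]
video = [dark, dark, dark, bright, bright]
candidates = scene_boundaries(video)   # proposals for the author to confirm
```

The output is a list of candidate boundaries rather than a final decision, which matches the semi-automated view: the tool proposes lexical elements and the author accepts or rejects them.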

In order to provide an effective analysis of the visual information in multimedia, we can either restrict the visual information - which is what we would need to do if we were using object recognition, but is impractical for general authoring applications - or restrict the analysis which we are performing. Authoring assistance can be provided by combining appropriate analysis tools with the interaction of the author to guide the analysis. For example, assistance can be provided by using analysis tools (such as segmentation) to assist the multimedia author in identifying possible objects. The author will interact with the analysis tools, guiding them where necessary, but providing the necessary control. The analysis tools are used essentially to provide assistance to the author, rather than performing the entire authoring process. The authors' current research is following this path - investigating methods of using image analysis tools to semi-automate the authoring process which has previously been performed by hand.
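One way such author-guided assistance might work is sketched below in Python. The author supplies a single seed point by clicking inside an object, and a simple region-growing step proposes the surrounding region for the author to accept or adjust. Region growing is chosen here only because it is easy to show; the text does not commit to a particular segmentation method, and the function names, the tolerance value and the sample image are hypothetical.

```python
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int]   # (row, column)


def grow_region(image: List[List[int]], seed: Pixel,
                tolerance: int = 10) -> Set[Pixel]:
    """Collect 4-connected pixels whose grey level is within `tolerance`
    of the grey level at the seed pixel."""
    rows, cols = len(image), len(image[0])
    seed_value = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_value) <= tolerance):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


def bounding_box(region: Set[Pixel]) -> Tuple[int, int, int, int]:
    """(top, left, bottom, right), inclusive, for displaying the proposal."""
    rs = [r for r, _ in region]
    cs = [c for _, c in region]
    return min(rs), min(cs), max(rs), max(cs)


# A dark 2 x 2 object on a bright background.
image = [
    [200, 200, 200, 200],
    [200,  50,  55, 200],
    [200,  52,  48, 200],
    [200, 200, 200, 200],
]
proposal = grow_region(image, seed=(1, 1))   # author clicked inside the object
```

The author remains in control: the tolerance can be changed and the seed moved until the proposed region matches the intended object, at which point the region can be stored as a lexical component.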

The most substantial example of similar research is the QBIC project, detailed on the QBIC WWW home page http://wwwqbic.almaden.ibm.com/ where image analysis tools have been used to support image retrieval based on image content. The QBIC system includes various tools which can be used to identify regions of images. For example, a drawing tool allows the user to select a region of an image and then have the drawn line snap to the nearest boundary (using snakes). Although these tools are not being used for multimedia authoring of visual information (rather they focus on image databases), they have illustrated the power of image analysis tools in visual information handling.

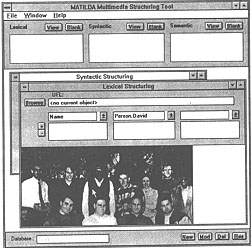

We are currently investigating the inclusion of similar tools into a multimedia authoring system. Figure 5 shows the MATILDA information structuring package with an image loaded into the lexical structuring tool (The MATILDA information structuring system, and how these tools are used in the authoring process, is described in a companion paper in the same proceedings as this paper). At present the tool is still being developed. Over time we intend to incorporate progressively more sophisticated tools, providing an enhanced level of functionality to the authoring of visual information.

Figure 5: We are currently investigating the inclusion of a lexical structuring tool for image data in our prototype MATILDA information structuring tool. This tool will provide progressively more sophisticated segmentation and region identification assistance.

The implementation of the active image media is the first phase of what the authors see as the development of the ability to treat all media generically. Thus the application becomes independent of the particular media type. Each media type allows static and dynamic links from its various semantic components, contextual analysis etc. - the range of options now available with textual media. This research has tried to begin the work on treating image data in the same fashion as textual data.

Aloimonos, L. (1988). Visual shape computation. Proceedings of the IEEE, 76(8), 899-916, Aug.

Constantopoulos, P., Drakopoulos, J. and Yeorgaroudakis, Y. (1991). Retrieval of multimedia documents by pictorial content: A prototype system. International Conference on Multimedia Information Systems, ACM and ISS, McGraw Hill, 1991, pp.35-48.

Edgar, T. H., Steffen, C. V. and Newman, D. A. (1992). Digital storage of image and video sequences for interactive media integration applications: A technical review. In Promaco Conventions (Ed.), Proceedings of the International Interactive Multimedia Symposium, 279-284. Perth, Western Australia, 27-31 January. Promaco Conventions. http://www.aset.org.au/confs/iims/1992/edgar1.html

Ginige, A., Lowe, D. and Robertson, J. (1995). Hypermedia authoring. IEEE Multimedia, Winter 1995.

Lowe, D. and Ginige, A. (1990). A hierarchical structure for spatial domain coding of video images. The Australian Video Communications Workshop, Melbourne, Australia, July 1990, pp 195-203.

Lowe, D. B. (1992). Image Representation via Information Decomposition. PhD Thesis, School of Electrical Engineering, University of Technology, Sydney, December 1992.

Lowe, D. B. and Ginige, A. (1993). The use of object recognition in multimedia. Image and Vision Computing NZ '93, Auckland, New Zealand, August 16-18, 1993.

Lowe, D. B. and Ginige A. (1996). MATILDA: A framework for the representation and processing of information in multimedia systems. In C. McBeath and R. Atkinson (Eds), Proceedings of the Third International Interactive Multimedia Symposium, 229-236. Perth, Western Australia, 21-25 January. Promaco Conventions. http://www.aset.org.au/confs/iims/1996/lp/lowe2.html

Robertson, J., Merkus, E. and Ginige, A. (1994). The Hypermedia Research Toolkit (HART). European Conference on Hypertext '94, UK, September 1994.

Wong, C. Y. (1992). Research directions in hypermedia. In Promaco Conventions (Ed), Proceedings of the International Interactive Multimedia Symposium, 299-310. Perth, Western Australia, 27-31 January. Promaco Conventions. http://www.aset.org.au/confs/iims/1992/wong.html

Wu, L. J. (1984). Image coding using visual modelling and composite sources. International conference on Digital Signal Processing, Florence, pp.492-497, September 1984.

Authors: Dr David Lowe, Senior Lecturer, Computer Systems Engineering, University of Technology, Sydney, PO Box 123, Broadway NSW 2007, Australia. Tel: +61 2 330 2526 Fax: +61 2 330 2435 Email: dbl@ee.uts.edu.au WWW: http://www.ee.uts.edu.au/~dbl

Associate Prof. Athula Ginige

Please cite as: Lowe, D. B. and Ginige, A. (1996). Authoring of visual information in multimedia. In C. McBeath and R. Atkinson (Eds), Proceedings of the Third International Interactive Multimedia Symposium, 221-228. Perth, Western Australia, 21-25 January. Promaco Conventions. http://www.aset.org.au/confs/iims/1996/lp/lowe1.html